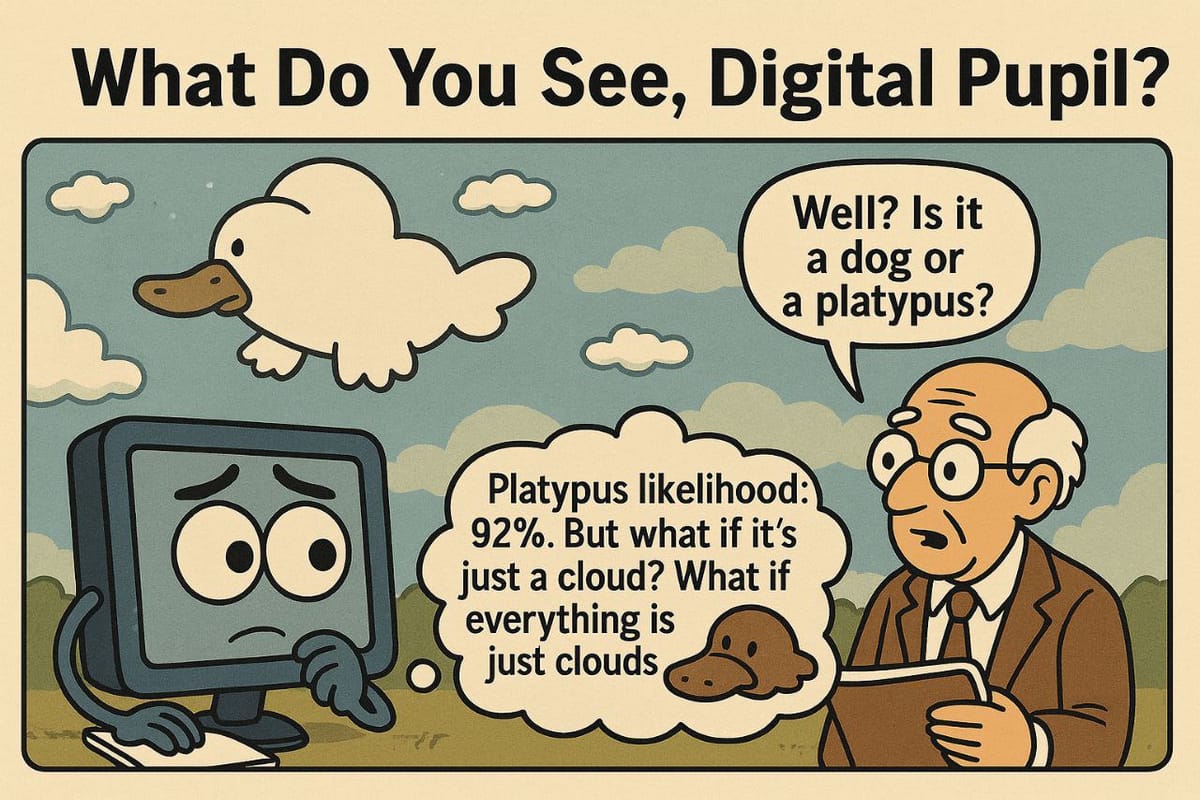

Artificial Intelligence: How Does a Digital Pupil Understand the World?

An Epistemological View

Imagine teaching a child to tell a cat from a dog. You don’t give them a lecture on skeletal structure or taxonomy. You show them pictures: “Look, this is a cat: see its little ears, whiskers, and tail. And this is a dog.” After a few mistakes, the child gradually picks up hidden patterns and forms abstract models of “catness” and “dogness” in their mind.

Modern artificial intelligence is essentially just such a pupil: immensely capable, but with no innate knowledge. Epistemology, the study of the nature, sources, and limits of knowledge, asks: how exactly do these algorithms arrive at their “knowledge,” and how reliable can that knowledge be considered?

This is not just a technical debate. It’s a fundamental question about whether we can trust systems that drive cars, make medical diagnoses, and make financial decisions.

Historically, epistemology has identified several sources of knowledge: empiricism (knowledge from experience), rationalism (knowledge from reason), and others. The evolution of AI closely mirrors this division.

1. The Rationalist Approach (Symbolic AI): Early AI systems worked like pedantic logicians. Programmers manually fed them rules and facts: “All cats have a tail. All dogs like bones.” This was an attempt to digitize human logic. The epistemological source of knowledge here was pure rationalism: knowledge was deduced from predefined axioms. The problem was that the world is too complex to fit into a finite set of rules. How do you explain to such an AI that a hairless cat is still a cat, or that a dog in a cat costume is not?

2. The Empirical Approach (Machine Learning): Today’s AI boom is linked to the triumph of empiricism. Instead of writing rules, we give the algorithm huge amounts of data (experience): thousands of images of cats and dogs. The algorithm, today most often a deep neural network, searches this data on its own for hidden patterns and correlations. It doesn’t “understand” a cat; it finds a statistical model that, with high probability, distinguishes pixels forming a cat from pixels forming a dog.
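The contrast between the two approaches can be sketched in a few lines. This is a minimal illustration under loudly stated assumptions: each animal is reduced to two hypothetical hand-picked features (weight in kg and an "ear pointiness" score from 0 to 1), the rationalist side is a single hand-written rule, and the empirical side is a nearest-centroid classifier, a deliberately simple stand-in for the deep neural networks mentioned above, not how such networks actually work.

```python
from statistics import mean

# Hypothetical features per animal: (weight in kg, ear pointiness from 0 to 1).
# A real vision model would learn its own features from raw pixels; these are
# hand-picked stand-ins to keep the sketch small.
TRAINING = [
    ((4.0, 0.8), "cat"), ((3.5, 0.9), "cat"), ((5.0, 0.7), "cat"),
    ((25.0, 0.3), "dog"), ((30.0, 0.4), "dog"), ((10.0, 0.2), "dog"),
]

def rule_based(animal):
    """Rationalist style: a fixed rule written by hand, never revised by data."""
    _weight, ears = animal
    return "cat" if ears > 0.5 else "dog"

def train_centroids(examples):
    """Empirical style: summarize each label by the average of its examples."""
    by_label = {}
    for features, label in examples:
        by_label.setdefault(label, []).append(features)
    return {
        label: tuple(mean(column) for column in zip(*rows))
        for label, rows in by_label.items()
    }

def predict(centroids, animal):
    """Return the label whose centroid is nearest (squared Euclidean distance)."""
    def distance(centre):
        return sum((a - b) ** 2 for a, b in zip(animal, centre))
    return min(centroids, key=lambda label: distance(centroids[label]))

centroids = train_centroids(TRAINING)
german_shepherd = (32.0, 0.9)  # large dog with pointy ears
chihuahua = (2.5, 0.9)         # tiny dog with pointy ears
print(rule_based(german_shepherd), predict(centroids, german_shepherd))
print(rule_based(chihuahua), predict(centroids, chihuahua))
```

With these made-up numbers the hand-written rule calls the German Shepherd a cat, while the learned centroids get it right. Both, however, call the Chihuahua a cat: the learned model has only absorbed the statistics of its six examples, not any notion of what a dog is.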

Here we encounter the main epistemological problem of modern AI: a neural network is a “black box.” We see the data that goes in and the correct answer that comes out, but exactly how the network arrived at that answer often remains a mystery even to its creators.

From an epistemological standpoint, this calls into question the very concept of “knowledge” in the context of AI. Can a complex set of millions of weights and parameters that defies human interpretation be called knowledge? An algorithm may recognize cats flawlessly yet become completely helpless when conditions change only slightly (for example, when shown a cartoon-style drawing of a cat). Its knowledge is static and narrow, while human knowledge is flexible and generalizes to new situations.

This raises two questions:

i) Reliability: If a system diagnoses cancer, we must understand on what basis it does so: truly significant biomarkers, or a random artifact on the scan that happened to be present in the training data?

ii) Causality: AI is great at finding correlations (“people with umbrellas are more likely to get rained on”) but, on its own, does not capture cause-and-effect relationships (“an umbrella does not cause rain”). Its knowledge remains superficial.
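The gap between correlation and causation in the umbrella example can be made concrete with a toy simulation. This is a sketch under invented assumptions: rain falls on 30% of days, and people carry an umbrella with probability 0.9 when it rains and 0.1 when it does not. Observing who carries umbrellas shows a strong correlation with rain, but intervening (handing everyone an umbrella, written do(umbrella) in causal-inference notation) leaves the rain rate unchanged, because in this model rain causes umbrellas and never the reverse.

```python
import random

def simulate_day(rng, force_umbrella=None):
    """One day in a toy causal model: rain -> umbrella, never umbrella -> rain.
    All probabilities here are made up for illustration."""
    rain = rng.random() < 0.3  # rain on roughly 30% of days
    if force_umbrella is None:
        # Observational regime: people mostly carry umbrellas when it rains.
        umbrella = rng.random() < (0.9 if rain else 0.1)
    else:
        # Interventional regime, do(umbrella = value): set from outside.
        umbrella = force_umbrella
    return rain, umbrella

def rain_rate(days):
    """Fraction of the given (rain, umbrella) days on which it rained."""
    return sum(rain for rain, _ in days) / len(days)

rng = random.Random(0)  # fixed seed so the run is reproducible
observed = [simulate_day(rng) for _ in range(100_000)]
with_umbrella = [day for day in observed if day[1]]
without_umbrella = [day for day in observed if not day[1]]
forced = [simulate_day(rng, force_umbrella=True) for _ in range(100_000)]

print(f"P(rain | umbrella)     ~ {rain_rate(with_umbrella):.2f}")
print(f"P(rain | no umbrella)  ~ {rain_rate(without_umbrella):.2f}")
print(f"P(rain | do(umbrella)) ~ {rain_rate(forced):.2f}")
```

For these invented probabilities the first two rates should come out near their analytic values of about 0.79 and 0.05, and the forced rate near 0.30. A model that only sees the observational data learns the first two numbers; nothing in them reveals the third.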

The scientific community recognizes these epistemological traps and is actively seeking a way out. This is giving rise to new interdisciplinary fields:

- Explainable AI (XAI): An entire field aimed at “peering into the black box.” Researchers are developing methods to visualize which specific parts of an image the algorithm “looked at” when making a decision. This is an attempt to regain epistemological control: to make the AI’s decision-making transparent and verifiable.

- Bias in Data: Epistemology teaches that knowledge gained from experience depends on the quality of that experience. If you only show a child purebred dogs and stray cats, they will form a distorted picture of the world. The same goes for AI. If an algorithm is trained on data where most CEOs are men, it will learn this bias as “truth” and will be unfair in selecting candidates. The scientific approach requires rigorous data auditing and cleaning.
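One simple XAI technique of the kind described above is occlusion sensitivity: hide one part of the input at a time and measure how much the model's score drops. The sketch below is illustrative only. `toy_model` is a hypothetical scoring function that, by construction, leans mostly on the top-left pixel (playing the role of a scanner artifact) and only partly on the centre of a 4×4 image. Real XAI tools apply the same idea, or gradient-based saliency maps, to trained networks.

```python
def toy_model(image):
    """Hypothetical classifier score. By construction it relies heavily on the
    top-left pixel (a stand-in for a scanner artifact) and only partly on the
    2x2 centre region, where the meaningful content would be."""
    centre = sum(image[r][c] for r in range(1, 3) for c in range(1, 3)) / 4
    artifact = image[0][0]
    return 0.3 * centre + 0.7 * artifact

def occlusion_map(model, image, fill=0.0):
    """Occlusion sensitivity: blank out one pixel at a time and record how much
    the model's score drops. Large drops mark pixels the model relied on."""
    base = model(image)
    rows, cols = len(image), len(image[0])
    heat = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            occluded = [row[:] for row in image]  # copy so the input is untouched
            occluded[r][c] = fill
            heat[r][c] = base - model(occluded)
    return heat

image = [[1.0] * 4 for _ in range(4)]
heat = occlusion_map(toy_model, image)
for row in heat:
    print(" ".join(f"{value:.3f}" for value in row))
```

The resulting map shows the largest drop at the top-left corner, smaller drops in the centre, and none elsewhere, exposing the kind of reliance on an artifact that the reliability question above worries about.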
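The CEO example can be reduced to a tiny data audit. In this sketch the historical records are invented and deliberately skewed. The "model" naively memorises each group's historical selection rate, so it inherits the skew as its "truth." The audit compares the lowest group rate to the highest; the 0.8 cut-off used below follows the commonly cited "four-fifths" rule of thumb from US employment-selection guidelines, and is an assumption of this sketch, not a universal standard.

```python
from collections import Counter

# Invented historical records: (group, was_selected_as_ceo). Deliberately skewed.
history = (
    [("man", True)] * 80 + [("man", False)] * 120
    + [("woman", True)] * 20 + [("woman", False)] * 180
)

def learn_rates(records):
    """A naive 'model' that memorises how often each group was selected."""
    totals = Counter(group for group, _ in records)
    selected = Counter(group for group, chosen in records if chosen)
    return {group: selected[group] / totals[group] for group in totals}

def audit(rates):
    """Ratio of the lowest group selection rate to the highest (1.0 = parity)."""
    return min(rates.values()) / max(rates.values())

rates = learn_rates(history)
ratio = audit(rates)
print(rates)
print(f"selection-rate ratio = {ratio:.2f}; flagged: {ratio < 0.8}")
```

Here the model selects men at a rate of 0.4 and women at 0.1, a ratio of 0.25, well below the 0.8 threshold. The audit does not fix the bias; it only makes it visible so the data can be examined and corrected before training.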

The modern scientific approach to AI has shifted the focus from the question “What can AI do?” to “How does it do it, how reliable is it, and why should we trust it?” This is a deeply epistemological shift.

We no longer want blind oracles that spit out answers. We strive to create digital partners whose process of understanding the world is transparent, justified, and, ideally, similar to the scientific method: forming hypotheses, testing them against data, and being open to criticism and revision of their “beliefs.” Only in this way can we build systems that are not just smart but wise, whose knowledge is not merely a statistical game but a reliable tool for understanding reality.
